I remember many years ago seeing a Tweet from an online rock star that went a little something like this:

I just made an online business 20% extra revenue a year with one simple split test. CRO FTW!

Ah, the good old A/B test; it’s the answer to all our revenue-increasing prayers, right? Come up with a hypothesis, set up a test and let the users decide which version converts best. Then simply sit back (probably on a beach somewhere with a cold beer) and watch the extra money roll in; what could be more straightforward? And who doesn’t want to make their business shedloads of freaking money?

I’ve run multitudinous A/B and MV tests for well over 10 years across many high growth ecommerce brands, and I’ve certainly seen some great long term successes off the back of that.

But here’s the problem with what our online rock star tweeted (and I see those kinds of assumptions a lot); often when you get to the year end and speak to FDs or CFOs in a business, all that extra guaranteed revenue that should have been achieved off the back of those statistically proven tests simply isn’t there. Someone in the UX team/agency usually murmurs something about “changing market conditions” or “a differing marketing channel mix” but as you might have guessed by now, that’s not really the whole picture.

A little A/B test example

A short while ago, before COVID-19 messed up everyone’s plans, I ran a little test on a startup ecommerce site. The intention behind the test was to try and increase revenue from a key product category page; a crucial part of the ecommerce purchasing journey and a landing page on the site for many visitors.

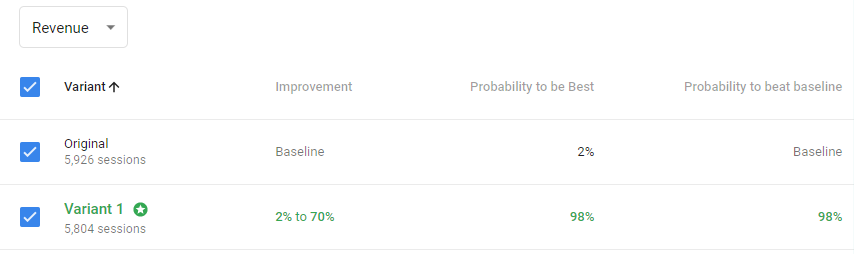

I ran the test in Google Optimize for a few weeks (with over 11,000 sessions) and was chuffed to bits when I achieved the following results:

Happy days! I’ve cut off the actual revenue figures due to confidentiality, but regardless, with 98% statistical certainty we can see that my new variation of the page performed significantly better than the original. Did a couple of high revenue orders maybe skew the results?

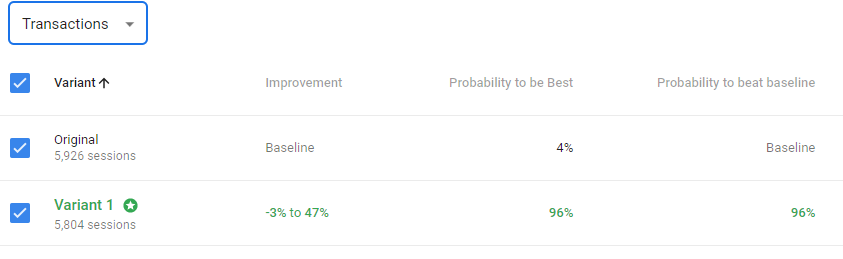

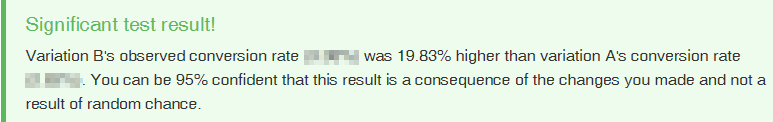

Nope! Based on transaction numbers, we can also see that the new page outperformed the old page in terms of conversion rate too, with 96% statistical certainty. Now, Google Optimize uses a Bayesian method to calculate A/B test results and although I personally don’t think it matters hugely when applied to ecommerce testing, not everyone is a fan of that method. So to make extra sure I was onto a winner, I also plugged the results into an online A/B test calculator which instead uses a Frequentist method to report statistical significance:

Winner! With such overwhelming statistical evidence that my test had outperformed the original page, I spent the next 24 hours strolling around like early-2016 Charles Martin:

There is, though, just one teeny tiny problem with all this I should probably share. As you know, against the original page (version A) I tested a new version (version B), but both those pages were… exactly the same.

Wait… what?

Now, depending on your level of CRO experience, you may or may not have seen this coming. What I set up above is often called an A/A test; 10+ years ago when I first came to the realisation that A/B testing is not as cut and dried as it may seem, not a lot of people had really written about A/A tests (in fairness, many of today’s popular online split testing tools hadn’t even launched at that point).

Indeed, I genuinely came across people who were utterly convinced that when presented with test results echoing the above, version A and version B must have been different (trust me, they weren’t) or that my split testing setup must be broken (trust me, it’s not); not since Kelly Rowland tried to text Nelly using Microsoft Excel have I seen such confusion:

You may well be in the same boat, and that’s perfectly OK; this blog is designed to be educational! You may also be thinking that if versions A and B are the same it’s not a “valid” test, or that I should have used a much bigger sample size. Sure, but here’s the thing: I could have changed any number of things in version B and obtained exactly the same result.

For example, I could have changed the background image in the category header to a similar image, or changed the label on the “Shop Now” button to “Buy Now”, or “Proceed to checkout” to “Continue to checkout”. These are the kind of changes I see UX teams and agencies test all the time. In reality, if you sat behind a one-way mirror and watched a million customers go through a moderated user test (and when you get to my age, it feels like you’ve watched a million moderated tests), they’re changes that would influence fewer than one of those million customers, consciously or subconsciously, in their decision to buy or not to buy.

You may also be thinking that I’m looking at this at quite a macro level by focusing on revenue, and I should only be focusing on micro conversions (e.g. how many people clicked through to the next page). A fair point, but I could quite happily show you A/A test results for bounce rate with the same “false positive” outcome.

Nowadays, awareness of A/A testing is a lot more widespread than it was 10 years ago and a Google search provides plenty of articles. There are differing views out there but my view is A/A tests are inherently not very useful (unless you’re writing an informative blog post); if you need an A/A test to diagnose an issue with your split testing setup, something has already gone fundamentally wrong somewhere.

But what I’m really, really interested in is why there’s such a high chance of a false positive with ecommerce A/B tests; I’ve read estimates online from statisticians stating that 90% of web based split tests actually produce invalid results (ouch); i.e. 18 times more than the 5% you might expect if everyone out there is testing to a supposed 95% statistical significance. And based on real world experience, that fits with what I mentioned at the start of this post; so much statistically guaranteed additional revenue promised from split tests never actually makes it onto the bottom line. So, what’s going on?
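Before digging into the why, it can help to see what the “expected” 5% looks like in isolation. The sketch below is a rough simulation, not a reproduction of my test or of how Google Optimize works: it assumes a made-up conversion rate and session count, uses a plain pooled two-proportion z-test (one common Frequentist approach), and runs lots of A/A tests where both arms are genuinely identical. The function names and all the numbers are my own illustrative assumptions.

```python
import math
import random


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions (or all conversions) in both arms
    z = (conv_b / n_b - conv_a / n_a) / se
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa_tests(n_tests=500, sessions_per_arm=2000,
                      true_rate=0.03, alpha=0.05, seed=1):
    """Share of A/A tests that report a 'significant' winner.

    Both arms use the same hypothetical true_rate, so every
    significant result counted here is a false positive.
    """
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(n_tests):
        conv_a = sum(rng.random() < true_rate for _ in range(sessions_per_arm))
        conv_b = sum(rng.random() < true_rate for _ in range(sessions_per_arm))
        if two_proportion_p_value(conv_a, sessions_per_arm,
                                  conv_b, sessions_per_arm) < alpha:
            false_positives += 1
    return false_positives / n_tests


if __name__ == "__main__":
    share = simulate_aa_tests()
    print(f"A/A tests declaring a winner: {share:.1%}")
```

Under these idealised assumptions the share of “winners” should land somewhere around the 5% mark. In other words, even a perfectly run test on two identical pages will hand you a statistically significant result now and again; the question is why real-world ecommerce tests seem to do it so much more often.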

It’s all about intent

The most fundamental piece of the puzzle to get your head around when applying split testing in an ecommerce environment is user intent; it’s really more important than any other aspect. Consider the following:

- When Royal Navy surgeon James Lind tested various cures for scurvy simultaneously on different patients in 1747, the end goal was the same for all patients: to (of course) recover from the illness.

- When software creators split tested software variations in the 1970s with live participants, the goal the participants were trying to achieve was always shared.

- When both Google and Bing tested the shade of blue links on their search result pages (and some have understandably questioned the validity of those tests), every user being shown a SERP had entered a search term with the intention of clicking a search result; the exact same intent was shared between all test participants.

You see the issue here? When a user lands on your ecommerce site, it could be for any one of a myriad of reasons. If users only visited an ecommerce website when they were ready to buy something, we’d all be sat basking in the glory of 80-90% conversion rates; it just doesn’t happen. Yet when we split test, we’re effectively making this huge unsaid assumption that all users of our site share at least some intent to achieve the goal we’re testing against.

They don’t.

Understanding intent to buy

I first wrote about the importance of intent to buy 10 years ago, and although my views have perhaps refined a little in that period, what I wrote back then still absolutely stands.

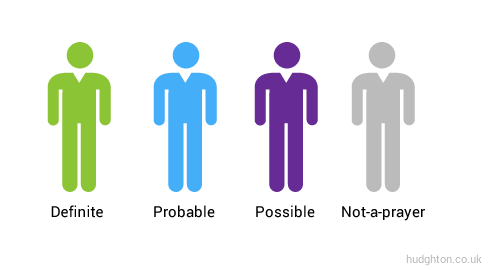

I like to categorise users into 4 distinct intent types:

You’ve probably worked this out already but to summarise:

- Definites will convert in the current session, barring the complete destruction of civilisation.

- Probables have strong intent and are likely to convert, but they could be put off.

- Possibles are unlikely to convert, but have sufficient intent that they could be persuaded.

- And the rest? They simply have no chance whatsoever of converting in the current session (that doesn’t mean you should ignore them though).

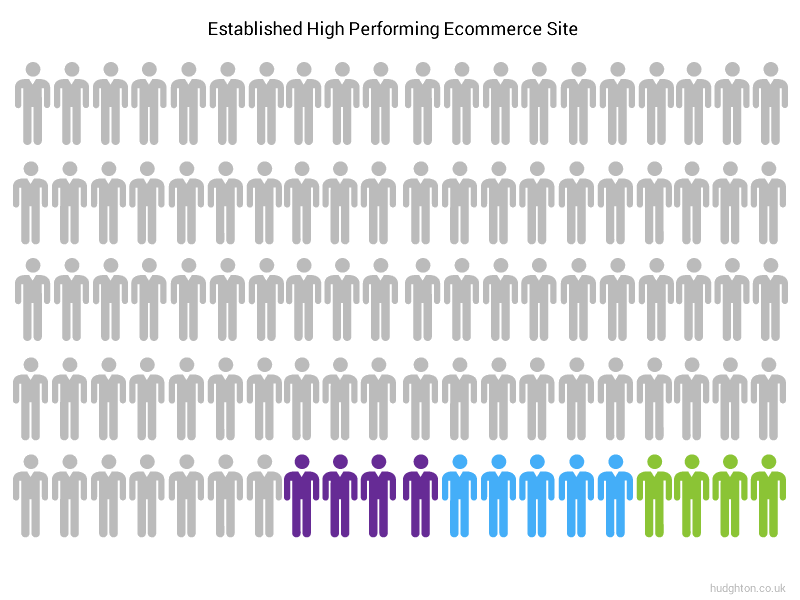

On this basis you can visually display the intent to buy profile for a website in an easily digestible way. If you have an established ecommerce website with a decent proportion of brand traffic, your intent to buy profile may look a little bit like this:

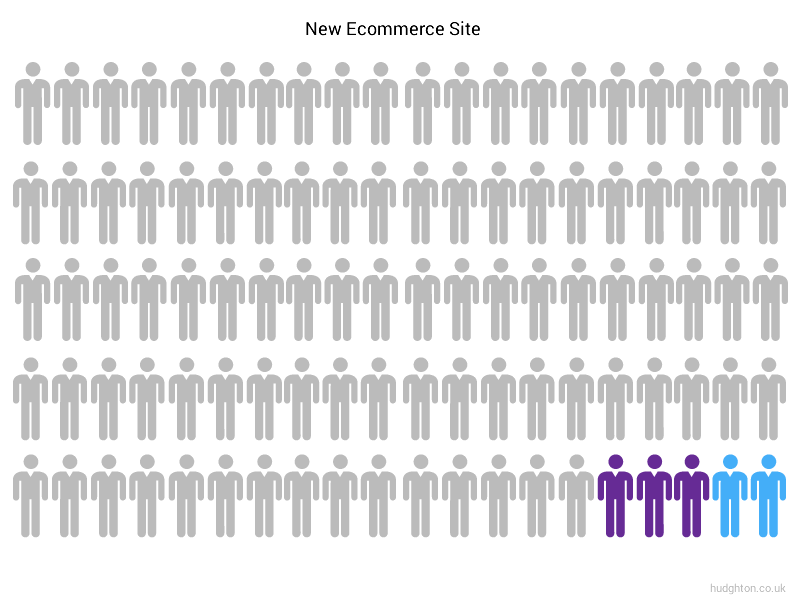

Whereas if your site is newer and not so established, your profile may look a bit more like this:

Those grey Not-a-prayer users are still important and they may well come back in a future session with more intent. But here’s the critical thing you need to remember; there is absolutely nothing you can do to your website UX to make them purchase in the current session. Whatever split test you shove in their lack-of-intent little faces won’t make any difference whatsoever; they simply won’t convert.

Likewise if you’re fortunate enough to have Definites visiting your site, the exact opposite applies. As long as it’s physically possible for that user to convert, they will, regardless of what split tests they’re subjected to during the conversion journey.

You could be testing the most sensational website improvement in the history of the internet, but if the user falls into that Not-a-prayer no-intent category (and that could easily be over 90% of the users you’re putting through the test), they are never, ever going to register a conversion against that test version.

It can be a little bit tough to get your head around this and talking about intent to buy in ecommerce fully would be a whole blog post on its own, but hopefully now you’re beginning to see why this is so important to consider when implementing website split tests.
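If it helps to put rough numbers on it, here’s a minimal sketch of an intent to buy profile as a weighted sum. Every share and conversion probability below is hypothetical and chosen purely for illustration (they’re not taken from any real site), and the helper names are my own. Definites are fixed at 100%, Not-a-prayers at 0%, and only the Probables and Possibles can be moved by a UX change.

```python
# All shares and conversion probabilities are hypothetical, for illustration only.
PROFILE = {
    "definite":     {"share": 0.01, "rate": 1.00, "persuadable": False},
    "probable":     {"share": 0.02, "rate": 0.60, "persuadable": True},
    "possible":     {"share": 0.07, "rate": 0.10, "persuadable": True},
    "not_a_prayer": {"share": 0.90, "rate": 0.00, "persuadable": False},
}


def overall_conversion(profile, relative_lift=0.0):
    """Site-wide conversion rate if a change lifts persuadable segments.

    relative_lift is applied only to segments flagged as persuadable;
    Definites and Not-a-prayers are left exactly where they are.
    """
    total = 0.0
    for segment in profile.values():
        rate = segment["rate"]
        if segment["persuadable"]:
            rate = min(1.0, rate * (1 + relative_lift))
        total += segment["share"] * rate
    return total


def persuadable_share(profile):
    """Proportion of sessions a UX change could influence at all."""
    return sum(s["share"] for s in profile.values() if s["persuadable"])


if __name__ == "__main__":
    baseline = overall_conversion(PROFILE)
    improved = overall_conversion(PROFILE, relative_lift=0.10)
    print(f"Persuadable sessions: {persuadable_share(PROFILE):.0%}")
    print(f"Baseline conversion:  {baseline:.2%}")
    print(f"With a 10% lift:      {improved:.2%}")
```

With this made-up profile, fewer than one in ten sessions can be influenced by the test at all; the rest simply add sessions to both variations without ever being able to tell them apart.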

Now look, intent to buy profiles are fluid and of course there are edge cases; if you reduce the price of everything on your site by 95% then your profile will look a lot different. But putting that aside and assuming you’re operating your ecommerce site under “normal” conditions, if there’s absolutely no way you can convert those Not-a-prayer users, and equally no way you can stop those Definite users converting, why the hell are you showing those groups a split test?

OK Jon I get it, but what can I do?

Of course, the reason that you’ll inevitably end up showing split tests to users you can’t influence is that segmenting users by intent is very, very difficult. In fact I’d go as far as to say it’s currently impossible to do this with anything approaching 100% accuracy. Fortunately, there are some practical steps you can take to help improve the likelihood of a real measurable bottom line increase from your A/B test.

1) Consider A/B/A testing

I’ve been A/B/A testing by default for over a decade; in recent years I have seen a few other mentions online of this as a worthwhile strategy (I’m not claiming to have invented the concept by the way). Whilst adding another variation to a test does inherently increase the statistical chance of a false positive, this is massively outweighed in my practical experience by the extra validation provided. It’s also a pretty easy concept to digest for other people within a business when you’re trying to relay this data in order to drive change.
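For a feel of how much the extra variation costs you statistically, here’s a back-of-the-envelope sketch. It assumes each comparison is tested at the same significance level and that the comparisons are independent; that second assumption isn’t strictly true when variations share the same traffic, so treat the output as a rough guide rather than an exact figure. The function name is my own.

```python
def chance_of_any_false_positive(comparisons, alpha=0.05):
    """Chance that at least one of several independent comparisons
    comes back 'significant' purely by chance."""
    return 1 - (1 - alpha) ** comparisons


if __name__ == "__main__":
    for k in (1, 2, 3):
        print(k, f"{chance_of_any_false_positive(k):.2%}")
```

At a 95% significance threshold, going from one comparison to two takes the chance of at least one fluke from 5% to roughly 9.75% under these assumptions. That’s the cost; the benefit is the extra validation described above.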

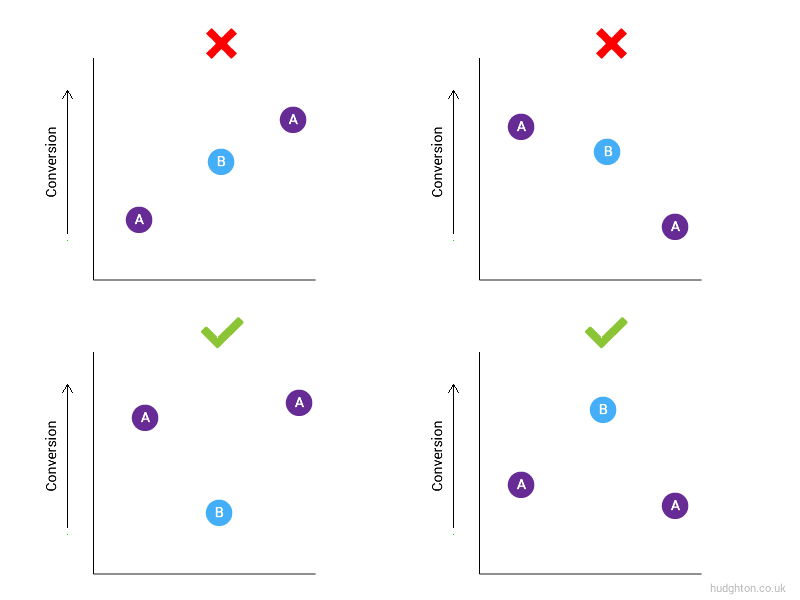

You don’t need to be focused on A and A returning exactly the same value as the chances are you’d be waiting a very long time for that to be the case. Assuming your split testing software is reporting a statistically significant victory (or defeat) for B, you’re really just looking for a mountain or a valley:

It’s still absolutely possible to get a false positive A/B/A test even if A and A show similar conversion rates and B is showing a statistically significant different result; you could run an A/A/A test and end up with any of the above graph profiles. But in my practical experience, the chance of getting a meaningful test result, that really has a genuine positive impact on your ecommerce site performance, is significantly increased if you A/B/A test.
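The mountain-or-valley check can be sketched in a few lines. This is just one way of expressing it, not a feature of any particular testing tool: it assumes you have conversions and sessions for each of the three arms, uses a pooled two-proportion z-test as the significance check (my choice for the example), and the function names and figures are hypothetical.

```python
import math


def p_value(conv_x, n_x, conv_y, n_y):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_x + conv_y) / (n_x + n_y)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_x + 1 / n_y))
    if se == 0:
        return 1.0
    z = (conv_y / n_y - conv_x / n_x) / se
    return math.erfc(abs(z) / math.sqrt(2))


def classify_aba(a1, b, a2, alpha=0.05):
    """Classify an A/B/A result; each arm is (conversions, sessions).

    Returns (shape, b_beats_or_loses_to_both) where shape is
    'mountain', 'valley' or 'slope' (B sits between the two A arms).
    """
    rate_a1, rate_b, rate_a2 = (c / n for c, n in (a1, b, a2))
    if rate_b > max(rate_a1, rate_a2):
        shape = "mountain"
    elif rate_b < min(rate_a1, rate_a2):
        shape = "valley"
    else:
        shape = "slope"
    # Assumption for this sketch: B must differ significantly from BOTH A arms.
    differs_from_both = (p_value(*a1, *b) < alpha and p_value(*a2, *b) < alpha)
    return shape, shape != "slope" and differs_from_both


if __name__ == "__main__":
    # Hypothetical figures: (conversions, sessions) per arm.
    print(classify_aba((100, 4000), (150, 4000), (104, 4000)))
    print(classify_aba((100, 4000), (150, 4000), (160, 4000)))
```

A “slope”, where B sits between the two A arms, is the profile that should make you pause, whatever the headline comparison against a single A says. As noted above, a mountain or valley still isn’t proof; it’s one more sanity check before you promise anyone extra revenue.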

2) Pay attention to the conversion path length

Broadly speaking, the shorter the distance between the test and the conversion goal, the more likely the user is to have some intent to convert. If you’re testing a change on your website homepage and measuring overall site conversion, that’s quite a gap and as I’ve explained above, many users subjected to that test will have zero intent to buy. Whereas if you’re running a test on the final checkout stage before purchase, the proportion of users with intent is likely to be much higher.

Don’t fall into the trap of thinking every user who adds to basket or even proceeds down the checkout funnel has intent to purchase in the current session, but when you’re considering where to focus testing resource in order to make a genuine impact on the site revenue generated, those shorter path lengths can often be a sensible place to start.

3) If in doubt, run the test again

Now look, I’m not saying re-run every test until you get the result you want, but as a product owner/client (with your extensive understanding of your customer base), if you’re looking at the results of a split test and thinking “that just doesn’t add up”, don’t be afraid to ask for the test to be run again.

Your UX team/agency should have absolutely no issue with an occasional request to do this; if the result is the same, fantastic, you’ve learnt something. But if not, you’ve removed any false expectation of the change making a difference to the bottom line of the business, and that’s invaluable knowledge to have. Ultimately, the line needs to go where you want it to go, although I’d suggest being slightly more tactful than Neil Godwin:

4) But above all else, think

Whatever your role in the split testing process, the most important thing you have to do is think. Before you test, engage your brain, put your knowledge of customer intent on the website in question to good use, and really think about whether the change you’re testing can actually influence the goal you’re measuring against.

Equally, once a test is complete and you’re analysing the results, don’t assume that because your split testing software (be it Google Optimize, Optimizely, Visual Website Optimizer etc.) is reporting a statistical winner, your work is done. If you take nothing else from this post, please remember that a “statistically significant” test result from any of the above testing systems on an ecommerce website is only part of the story; don’t just blindly follow or relay those results in automatic expectation of a measurable impact on your website bottom line.

Of course, I’m still a huge advocate of A/B testing and a test & learn approach to drive incremental change on ecommerce sites; none of the above is intended to discredit split testing as a perfectly valid approach to aid growth. However, the more you understand what’s actually going on (instead of just blindly believing what your split testing software spits out) the more chance you have of really making a genuine long-term difference to your ecommerce site performance, and that can only be a good thing in my view.
